Spectral Feature Selection for Data Mining: Unlocking Hidden Patterns with Mathematical Precision

Data mining, the process of uncovering useful patterns and knowledge from large datasets, has transformed decision-making in many industries. A critical step in data mining is feature selection, which reduces data dimensionality and can improve model performance. Spectral feature selection stands out as a powerful technique that uses mathematical principles to identify the most informative features, helping data scientists extract more value from their datasets.

The Role of Spectral Feature Selection

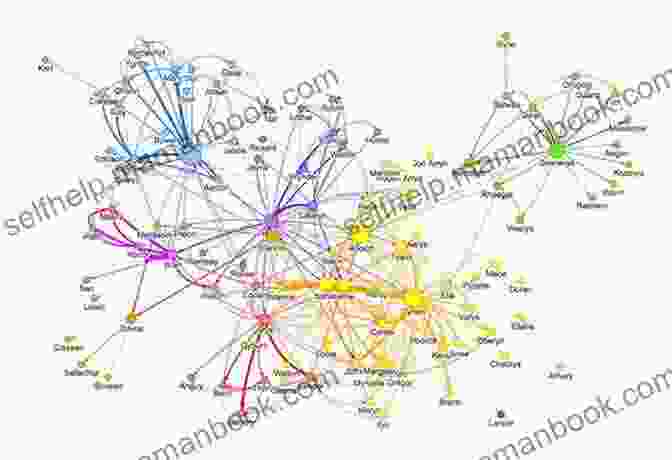

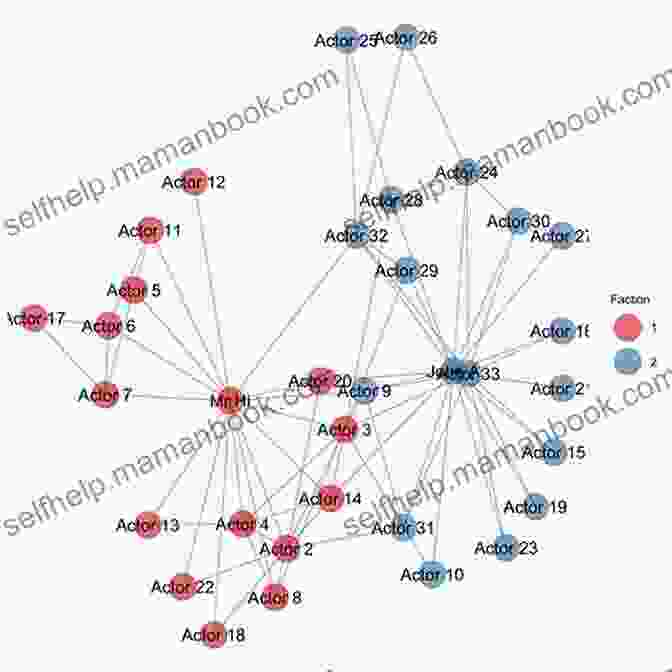

Spectral feature selection uses graph theory and linear algebra to analyze the relationships between data points and features. It constructs a graph in which nodes represent data points and edge weights represent the similarity between them. Decomposing the graph's Laplacian matrix yields eigenvectors; those with the smallest eigenvalues vary smoothly over the graph and encode how the data separates into classes or clusters. These eigenvectors are not themselves features. Instead, each original feature is scored by how consistent it is with this structure (roughly, whether it takes similar values on strongly connected points), and the highest-ranked features are selected.
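
To make the idea concrete, here is a minimal NumPy sketch of one well-known spectral criterion, the Laplacian Score (He, Cai and Niyogi, 2005). It is used here as a representative example, not as the specific method this text describes. For a feature vector f over the n samples, with similarity matrix S, degree matrix D and Laplacian L = D - S, the score is f̃ᵀLf̃ / f̃ᵀDf̃, where f̃ is f minus its degree-weighted mean. A low score means the feature varies smoothly over the graph. For brevity, the sketch builds a dense Gaussian (heat-kernel) graph with a hand-picked width `sigma`, whereas the original formulation uses a k-nearest-neighbour graph. The toy data are made up.

```python
import numpy as np

def gaussian_similarity(X, sigma):
    """Dense heat-kernel similarity S_ij = exp(-||x_i - x_j||^2 / (2 sigma^2)) with a zero diagonal."""
    sq = np.sum(X ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)  # clip tiny negative round-off
    S = np.exp(-d2 / (2.0 * sigma ** 2))
    np.fill_diagonal(S, 0.0)  # no self-loops
    return S

def laplacian_score(X, sigma=1.0):
    """Laplacian Score of each column of X (n_samples x n_features); lower means more structure-preserving."""
    S = gaussian_similarity(X, sigma)
    d = S.sum(axis=1)   # node degrees
    D = np.diag(d)
    L = D - S           # unnormalized graph Laplacian
    scores = np.empty(X.shape[1])
    for r in range(X.shape[1]):
        f = X[:, r]
        f_tilde = f - (f @ d) / d.sum()  # remove the degree-weighted mean (the trivial constant direction)
        num = f_tilde @ L @ f_tilde      # = 1/2 * sum_ij S_ij (f_i - f_j)^2, small if f is smooth on the graph
        den = f_tilde @ D @ f_tilde      # degree-weighted variance of f
        scores[r] = num / den if den > 0 else np.inf  # a constant feature carries no information
    return scores

# Made-up toy data: feature 0 separates two clusters, feature 1 is pure noise.
rng = np.random.default_rng(0)
labels = np.repeat([0, 1], 50)
X = np.column_stack([
    labels * 5.0 + rng.normal(0.0, 0.3, 100),
    rng.normal(0.0, 1.0, 100),
])
scores = laplacian_score(X, sigma=1.0)
ranking = np.argsort(scores)  # best (lowest score) first
print(scores, ranking)
```

On this toy data, the cluster-aligned feature 0 should receive a much lower score than the noise feature, so it ranks first.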

4.4 out of 5

| Property | Value |
|---|---|
| Language | English |
| File size | 14219 KB |
| Screen Reader | Supported |
| Print length | 220 pages |
| X-Ray for textbooks | Enabled |

In essence, spectral feature selection captures the global structure of the data and identifies the features that best explain the underlying patterns. It offers several advantages over traditional feature selection methods:

- Preserves Data Structure: Spectral feature selection considers the relationships between data points, preserving the inherent structure and dependencies within the data.

- Handles Non-Linear Data: Because the similarity graph is built from local similarities between data points, spectral feature selection can capture non-linear relationships and complex data distributions that many linear feature selection criteria miss.

- Robust to Noise: Because features are scored against structure shared by many data points rather than against individual samples, spectral feature selection tends to be relatively robust to noise and outliers, although this depends on how the similarity graph is constructed.

Applications of Spectral Feature Selection

Spectral feature selection has found widespread applications in various domains, including:

- Image Processing: Selecting salient features for image classification, object detection, and face recognition.

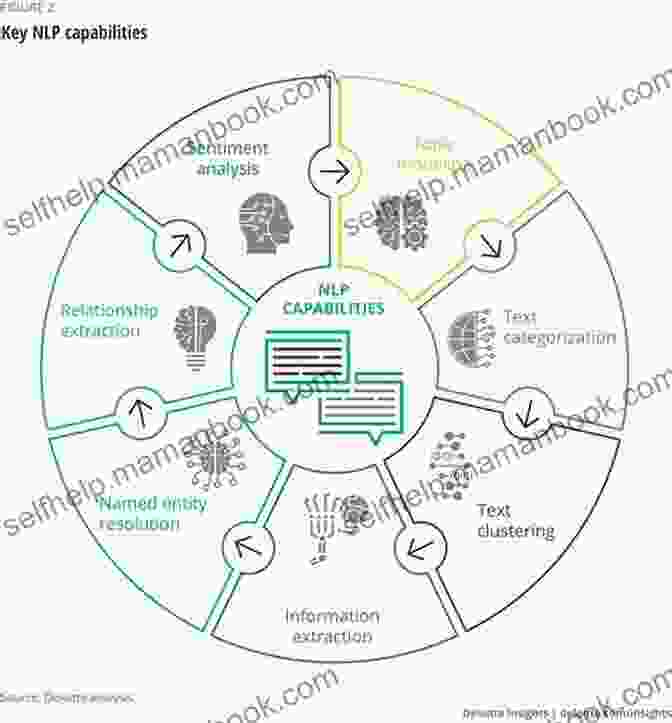

- Natural Language Processing: Identifying important words and phrases for text categorization, sentiment analysis, and machine translation.

- Medical Diagnosis: Discovering biomarkers and disease-specific features for early detection and personalized treatment.

- Cybersecurity: Analyzing network traffic patterns to detect anomalies, identify malicious actors, and protect against cyber threats.

- Financial Analysis: Selecting financial indicators for stock price prediction, credit risk assessment, and portfolio optimization.

Challenges and Future Directions

Despite its strengths, spectral feature selection also faces certain challenges:

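- Computational Complexity: For n data points, the similarity matrix has n × n entries, and a full eigendecomposition of the Laplacian takes on the order of n³ operations, which becomes expensive for large datasets.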

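- Parameter Tuning: Results depend on how the similarity graph is built (for example, the kernel width or the number of neighbors), and selecting the appropriate number of features and any regularization parameters requires careful tuning.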

- Integration with Machine Learning Models: Spectral feature selection is usually applied as a separate step before model training, so integrating it with machine learning models can be non-trivial and may affect model interpretability and performance.
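
The computational-complexity challenge above is often eased in the following way, shown here as an assumption rather than a method from the source. First, build a sparse k-nearest-neighbour graph so the Laplacian has only about n·k non-zeros. Then ask an iterative Lanczos solver (SciPy's `eigsh`, which wraps ARPACK) for just the few eigenvectors with the smallest eigenvalues, instead of running a dense eigendecomposition that needs O(n³) time and O(n²) memory. The function name `sparse_laplacian_eigs`, the bandwidth rule (sigma set to the median k-NN distance) and the defaults are hypothetical choices for this sketch.

```python
import numpy as np
from scipy.sparse import csr_matrix, diags
from scipy.sparse.linalg import eigsh
from scipy.spatial import cKDTree

def sparse_laplacian_eigs(X, n_neighbors=10, n_eigs=4):
    """Smallest eigenpairs of the normalized Laplacian of a sparse k-NN graph (hypothetical helper)."""
    n = X.shape[0]
    dist, idx = cKDTree(X).query(X, k=n_neighbors + 1)
    dist, idx = dist[:, 1:], idx[:, 1:]           # drop column 0, each point's match with itself
    sigma = np.median(dist)                        # assumed bandwidth heuristic
    weights = np.exp(-(dist.ravel() ** 2) / (2.0 * sigma ** 2))
    rows = np.repeat(np.arange(n), n_neighbors)
    W = csr_matrix((weights, (rows, idx.ravel())), shape=(n, n))
    W = W.maximum(W.T)                             # symmetrize: keep an edge if either endpoint lists it
    deg = np.asarray(W.sum(axis=1)).ravel()
    D_inv_sqrt = diags(1.0 / np.sqrt(deg))
    A = D_inv_sqrt @ W @ D_inv_sqrt                # normalized adjacency; L_sym = I - A
    # The largest eigenvalues of A correspond to the smallest of L_sym.
    # ARPACK only needs sparse matrix-vector products, never a dense n x n matrix.
    mu, vecs = eigsh(A, k=n_eigs, which="LA")
    lam = 1.0 - mu
    order = np.argsort(lam)
    return lam[order], vecs[:, order]

# Made-up data: two well-separated blobs of 200 points each.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (200, 2)), rng.normal(20.0, 1.0, (200, 2))])
lam, vecs = sparse_laplacian_eigs(X, n_neighbors=10, n_eigs=4)
print(lam)
```

Requesting the largest eigenvalues of the normalized adjacency, rather than `which="SM"` on the Laplacian itself, tends to converge faster with ARPACK. The returned eigenvectors could then feed an eigenvector-based feature score.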

Current research efforts are addressing these challenges by developing more efficient algorithms, optimizing parameter selection, and exploring novel approaches to integrate spectral feature selection with machine learning models. Future advancements in spectral feature selection promise to further enhance its capabilities and broaden its applications.
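
The sketch below touches on parameter selection and integration together. It wraps the Laplacian Score (used here as a representative spectral criterion) in a scikit-learn-style fit/transform class. The class `LaplacianScoreSelector` is hypothetical and written in plain NumPy; it is not a scikit-learn API. Features are chosen on training data only, and the same columns are reused on test data. When `sigma` is not given, the kernel width falls back to an assumed heuristic: the median distance from each sample to its `n_neighbors`-th nearest neighbour. Both this rule and the default `n_neighbors=7` are illustrative, not recommendations from the source. The dense similarity matrix limits this version to modest sample sizes.

```python
import numpy as np

class LaplacianScoreSelector:
    """Hypothetical fit/transform wrapper that keeps the k features with the lowest Laplacian Score."""

    def __init__(self, k, sigma=None, n_neighbors=7):
        self.k = k                      # number of features to keep (must be tuned)
        self.sigma = sigma              # kernel width; None -> heuristic below
        self.n_neighbors = n_neighbors  # only used by the heuristic

    def fit(self, X):
        sq = np.sum(X ** 2, axis=1)
        d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
        if self.sigma is None:
            # Assumed heuristic: median distance to each sample's n_neighbors-th nearest neighbour
            # (column 0 of each sorted row is the sample itself).
            kth = np.sqrt(np.sort(d2, axis=1)[:, self.n_neighbors])
            self.sigma_ = float(np.median(kth))
        else:
            self.sigma_ = float(self.sigma)
        S = np.exp(-d2 / (2.0 * self.sigma_ ** 2))
        np.fill_diagonal(S, 0.0)
        d = S.sum(axis=1)
        L = np.diag(d) - S
        F = X - (d @ X) / d.sum()                # degree-weighted centering of every column
        num = np.einsum("ir,ij,jr->r", F, L, F)  # f~^T L f~ for each column
        den = np.einsum("ir,i,ir->r", F, d, F)   # f~^T D f~ for each column
        with np.errstate(divide="ignore", invalid="ignore"):
            self.scores_ = np.where(den > 0, num / den, np.inf)
        self.selected_ = np.sort(np.argsort(self.scores_)[: self.k])
        return self

    def transform(self, X):
        # Reuse the columns chosen on the training data; never re-select on test data.
        return X[:, self.selected_]

# Made-up data: columns 0 and 2 are noise, column 1 separates two groups.
rng = np.random.default_rng(1)
labels = np.repeat([0, 1], 60)
X = np.column_stack([
    rng.normal(0.0, 1.0, 120),
    labels * 5.0 + rng.normal(0.0, 0.3, 120),
    rng.normal(0.0, 1.0, 120),
])
perm = rng.permutation(120)
train, test = perm[:80], perm[80:]
selector = LaplacianScoreSelector(k=1).fit(X[train])
X_test_reduced = selector.transform(X[test])
print(selector.selected_, X_test_reduced.shape)
```

Fitting on the training split alone keeps information from the test data out of the selection step. The reduced matrix can then be passed to any downstream model.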

Spectral feature selection is a powerful and versatile technique for data mining, helping data scientists identify the most informative features and extract more value from their datasets. By scoring features against the spectral structure of a similarity graph, it captures the global structure of data, handles non-linear relationships, and tends to be robust to noise. As research addresses the existing challenges and explores new directions, spectral feature selection is likely to play an increasingly important role in data-driven decision-making and in data mining more broadly.
